SCIAMA

High Performance Compute Cluster

Using GPUs on SCIAMA

If you are not already familiar with submitting jobs, see Submitting Jobs on SCIAMA first.

Using GPUs interactively

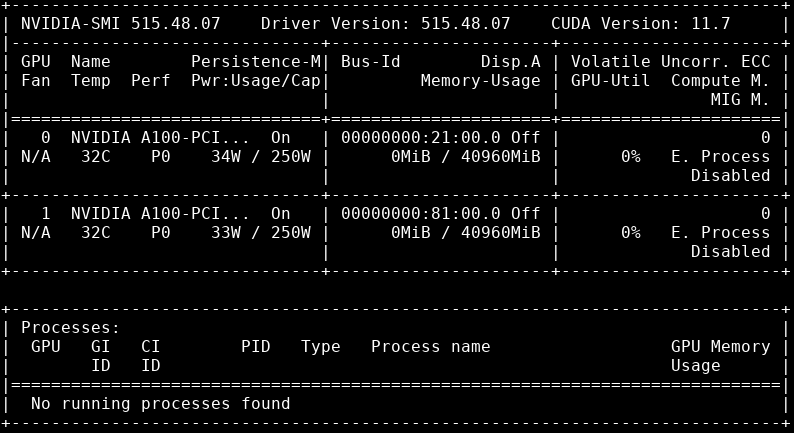

There are four GPU-enabled nodes, gpu01 to gpu04, each with 2 A100 GPUs.

To use them interactively, type:
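The command itself is not reproduced on this page. As a sketch only, a generic Slurm interactive request for one GPU could look like the following. It assumes the gpu.q partition named in the batch section and uses the standard srun options --partition, --gres and --pty; SCIAMA may provide its own wrapper command, so treat this as an assumption rather than the site's recommended method.

```shell
#!/bin/bash
# Generic Slurm interactive GPU request (an assumption, not the SCIAMA-specific command).
interactive_cmd="srun --partition=gpu.q --gres=gpu:1 --pty bash"

if command -v srun >/dev/null 2>&1; then
    # On a login node with Slurm installed, start the interactive shell.
    $interactive_cmd
else
    # Elsewhere, just show what would be run.
    echo "Would run: $interactive_cmd"
fi
```

Once the shell starts on a GPU node, running nvidia-smi there shows the GPU that was allocated.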

Submitting batch GPU jobs

To run batch jobs on GPU nodes, ensure your job submission script includes a request for GPUs:

#SBATCH --partition=gpu.q

#SBATCH --gres=gpu:1
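For context, a minimal sketch of a complete job script built around these two lines is shown here. The job name and time limit are hypothetical values chosen for illustration, and the script assumes Slurm exports CUDA_VISIBLE_DEVICES for the allocated GPU, which is its usual behaviour but is not confirmed for SCIAMA on this page.

```shell
#!/bin/bash
#SBATCH --partition=gpu.q
#SBATCH --gres=gpu:1
# Hypothetical job name and time limit; adjust to your own job.
#SBATCH --job-name=gpu-test
#SBATCH --time=00:10:00

# Record which GPUs the job can see (assumed to be set by Slurm).
visible_gpus="${CUDA_VISIBLE_DEVICES:-none}"
echo "Running on $(uname -n), visible GPUs: ${visible_gpus}"

# Print the GPU status if the NVIDIA tools are present on this node.
if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi
else
    echo "nvidia-smi not found on this node"
fi
```

Saved as, for example, gpu-test.sh (a hypothetical filename), the script would be submitted with sbatch gpu-test.sh.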

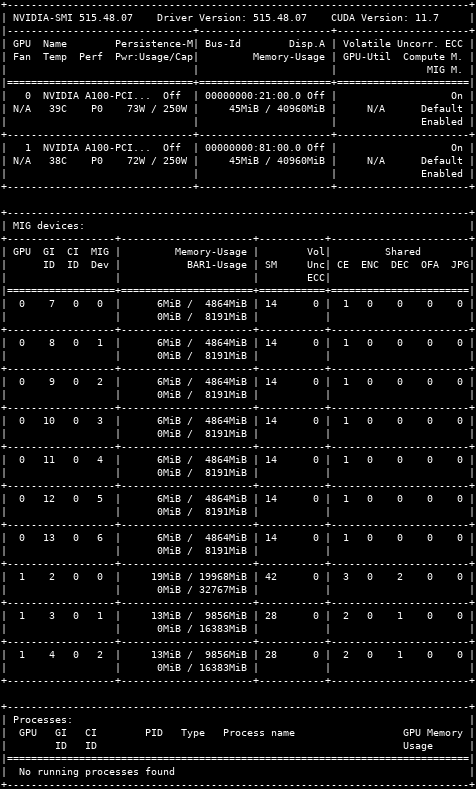

The GPUs on gpu02 are partitioned into smaller chunks (MIG partitions) so that more than one job can run on a GPU at the same time, rather than one job taking a whole 40GB GPU.

The GPUs are partitioned as 7x 1g.5gb, 2x 2g.10gb and 1x 3g.20gb. To use one of the smaller partitions, specify --gres=gpu:1g.5gb:1, either in the command or in a batch file. This allocates 1x 5GB MIG partition.

The following commands show which MIG partitions are available and which are currently in use or waiting to be used.

List the GPUs and MIG partitions that are configured:
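The commands themselves are not reproduced on this page. As a hedged sketch, the standard Slurm tools sinfo and squeue can report this kind of information. The show_gpu_status function name is hypothetical, and the gpu.q partition name is taken from the batch examples.

```shell
#!/bin/bash
# Hypothetical helper: report configured GPU resources and jobs on the GPU partition.
show_gpu_status() {
    if command -v sinfo >/dev/null 2>&1; then
        # %N = node names, %G = generic resources (GPUs and MIG profiles) per node.
        sinfo --partition=gpu.q --format="%N %G"
        # Jobs currently running or pending on the GPU partition.
        squeue --partition=gpu.q
    else
        echo "Slurm commands not found; run this on a node with Slurm installed"
    fi
}

show_gpu_status
```

On a GPU node itself, nvidia-smi -L lists the physical GPUs and any MIG devices defined on them.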

You can use multiple partitions on one GPU, or across both GPUs, with the following:

#SBATCH --partition=gpu.q

#SBATCH --gres=gpu:1g.5gb:7

#This will use all 7 partitions on one GPU

#SBATCH --gres=gpu:2g.10gb:2,gpu:3g.20gb:1

#This will use the whole of GPU 1

#SBATCH --gres=gpu:2g.10gb:1,gpu:1g.5gb:4

#Uses 1 partition of 10GB on GPU 1 and 4 partitions of 5GB on GPU 0
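As a quick check on the comments above, the memory requested by a MIG --gres string can be added up from the profile names, since the number before "gb" in each profile is its memory in GB. The gres_total_gb function below is a hypothetical helper written for this illustration, not a Slurm command.

```shell
#!/bin/bash
# Hypothetical helper: sum the GPU memory (in GB) requested by a MIG --gres string.
gres_total_gb() {
    local spec="$1" total=0 item profile count mem
    local IFS=','
    for item in $spec; do
        # Each item has the form gpu:<profile>:<count>, e.g. gpu:1g.5gb:7
        profile="$(echo "$item" | cut -d: -f2)"
        count="$(echo "$item" | cut -d: -f3)"
        # Memory is the number between the dot and "gb" in the profile name.
        mem="${profile#*.}"
        mem="${mem%gb}"
        total=$(( total + mem * count ))
    done
    echo "$total"
}

gres_total_gb "gpu:1g.5gb:7"                  # prints 35
gres_total_gb "gpu:2g.10gb:2,gpu:3g.20gb:1"   # prints 40
gres_total_gb "gpu:2g.10gb:1,gpu:1g.5gb:4"    # prints 30
```

The second request adds up to 40GB, which matches the comment that it uses the whole of one GPU.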

Output of nvidia-smi on gpu01

Output of nvidia-smi on gpu02