SCIAMA

High Performance Compute Cluster

The Sciama Cluster

The Sciama Cluster has 4288 compute cores and 1.8PB of Lustre file storage. It uses InfiniBand networking with 100Gb/sec throughput (4x EDR).

All SCIAMA nodes are x86_64 architecture with a mixture of AMD and Intel processors.

Nodes

| Nodes | Processor | CPUs per Node | Memory per Node |
|---|---|---|---|
| Login1-4 | Intel Xeon 2.67GHz CPU | 12 | 23GB |
| Login5-8 | AMD Opteron 2.8GHz CPU | 12 | 15GB |
| node100-195 | Intel Xeon 2.6GHz | 16 | 62GB Shared |
| node200-247 | Intel Xeon E5-260 2.3GHz | 20 | 62GB Shared |
| node300-311 | Intel Xeon Gold 6130 2.1GHz | 32 | 187GB Shared |
| node312-327 | Intel Xeon 2.1GHz | 32 | 376GB Shared |
| vhmem01 | Intel Xeon E5-2650 2.6GHz | 16 | 503GB |
| node350-351 | AMD EPYC 7662 2GHz | 128 | 128GB Shared |
| node401-404 | AMD EPYC 7713 2GHz | 128 | 1TB Shared |
| gpu01-02 | AMD EPYC 7713 2GHz, with 2 A100 40GB GPUs | 128 | 1TB Shared |
| gpu03-04 | AMD EPYC 7713 2GHz, with 2 A100 80GB GPUs | 128 | 512GB Shared |
| appserv1 | AMD EPYC 7542 2.9GHz | 32 | 256GB |
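When choosing a node type for a job, a rough memory-per-CPU figure can be easier to compare than the raw columns. The sketch below derives that ratio from a few rows of the table above. It assumes a node's memory is divided evenly among its CPUs and takes 1TB as 1024GB; the names `NODE_TYPES`, `memory_per_cpu` and `summarize` are illustrative only and are not part of any Sciama tooling.

```python
# Rows copied from the table above: (nodes, CPUs per node, memory per node in GB).
# Assumption: 1TB is treated as 1024GB.
NODE_TYPES = [
    ("node100-195", 16, 62),
    ("node200-247", 20, 62),
    ("node300-311", 32, 187),
    ("node312-327", 32, 376),
    ("vhmem01", 16, 503),
    ("node350-351", 128, 128),
    ("node401-404", 128, 1024),
    ("gpu01-02", 128, 1024),
    ("gpu03-04", 128, 512),
]


def memory_per_cpu(cpus, memory_gb):
    """Return memory per CPU in GB, assuming an even split across CPUs."""
    return memory_gb / cpus


def summarize(node_types):
    """Map each node range to its approximate memory per CPU in GB."""
    return {name: memory_per_cpu(cpus, mem) for name, cpus, mem in node_types}


if __name__ == "__main__":
    for name, gb in summarize(NODE_TYPES).items():
        print(f"{name:12s} {gb:7.2f} GB per CPU")
```

These ratios are only a guide: memory marked "Shared" is available to every CPU on the node, so a single process can use more than its even share.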

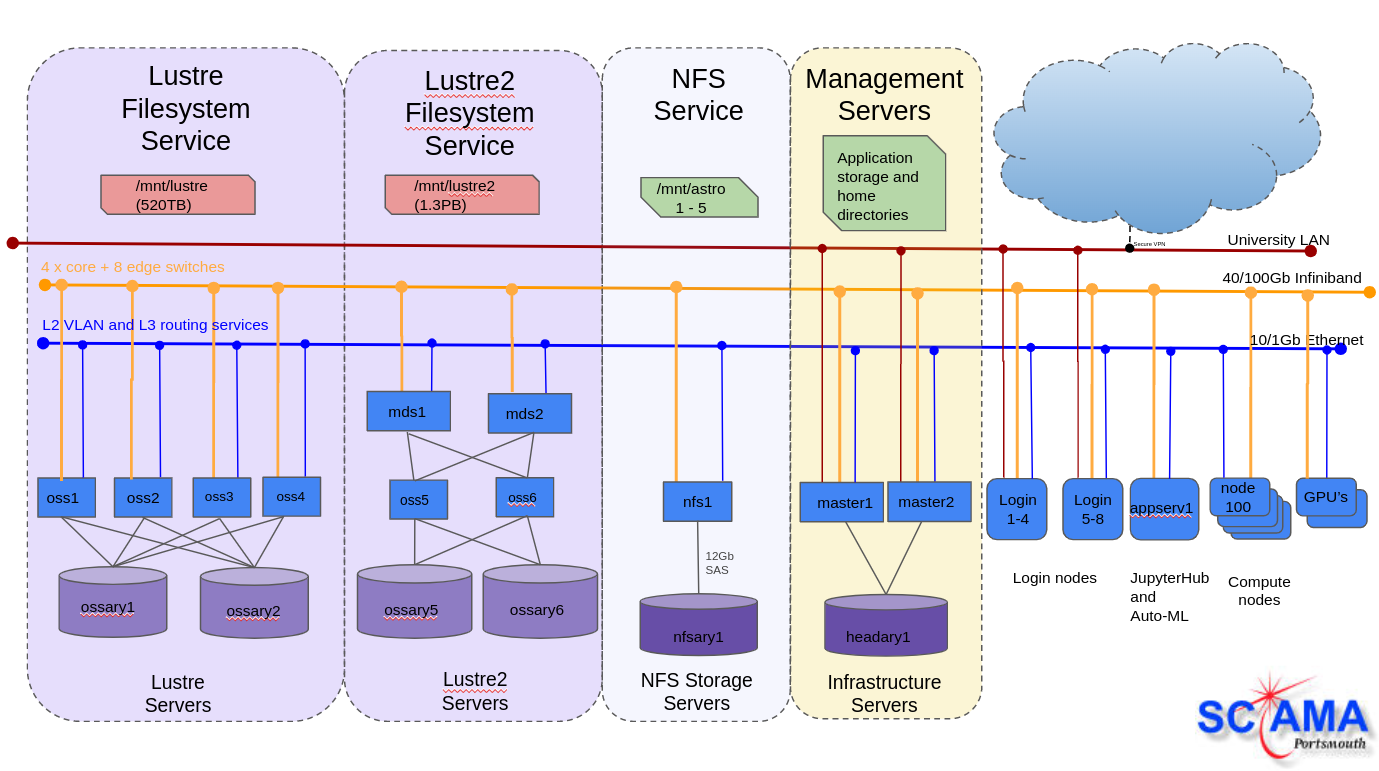

Schematic of Sciama-4 Network
