SCIAMA

High Performance Compute Cluster

Mixing MPI and OpenMP

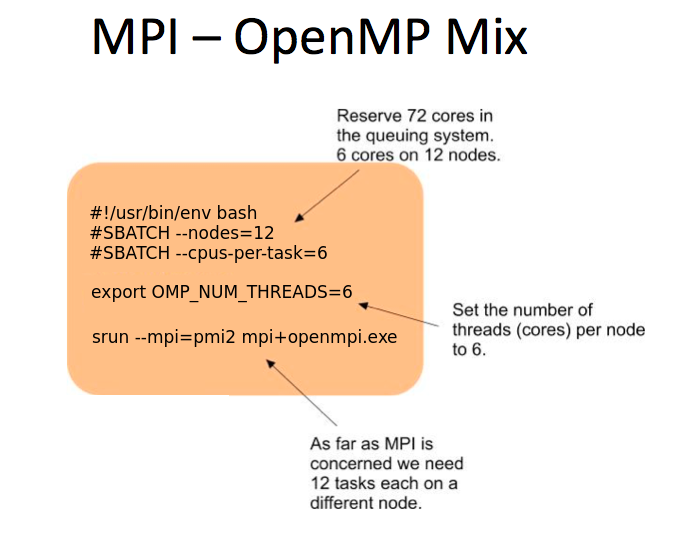

Some applications combine MPI and OpenMP. In this case MPI is used to start processes on the distributed nodes, and each of these processes is itself OpenMP-enabled. Unless this is controlled, it can overload a node: by default SLURM allocates only a single core to every task (i.e. MPI process), while OpenMP will use all available cores on the node. This can be fixed by telling SLURM to allocate additional cores to each task with the '--cpus-per-task' option, and by limiting the number of threads OpenMP creates with the environment variable 'OMP_NUM_THREADS'. (Hint: instead of updating the variable by hand every time the number of requested cores changes, you can simply set it to the SLURM environment variable $SLURM_CPUS_PER_TASK.) Below is an example job script: